In our last blog post about zero-downtime Postgres migrations, we shared how we set up this process using the open-source tool Bucardo. To recap, we outlined four steps needed to do this right:

- Bring up the new database.

- Migrate data live, as it changes in the current database, over to the new database instance without data loss.

- Switch all the clients over to the new database.

- Stop the synchronization process and sunset the old database.

This post dives into how we switched all our clients to the new database without any noticeable downtime.

What problem are we trying to solve?

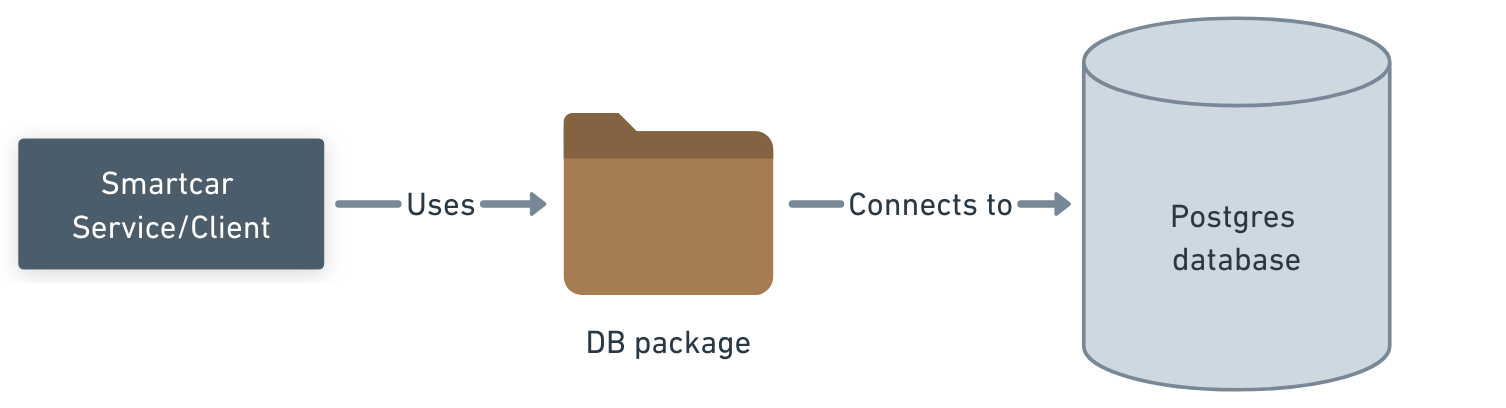

Smartcar has several clients that use the Postgres database. However, we use a centralized repository as a database package for all our dependent clients. This database package is in charge of actually creating and managing connections to the database and has all the configurations required to connect to the databases for all environments. That being said, our current architecture looks like this:

With the architecture above, switching all our services to the new database would have required downtime. The process would have included:

- Updating the DB package so it contains connection details for the new database.

- Releasing a new version of the DB package.

- Updating the DB package dependency for all Smartcar services.

- Redeploying the services with the updated dependency.

On paper, this sounds simple, but multiple services connect to the DB, so all of them would have to cut over at exactly the same moment. Since each service is updated and redeployed separately, that is practically impossible.

To get around this, we slightly modified our DB package so we could switch all connections over to the new database dynamically, without any package updates or deployments.

The solution: Dynamic connection configurations

We added a few lines of code to our DB package that let us update the database connection configuration dynamically from an external source, such as a configuration management tool or, in our case, a separate database. In summary, the new code did the following:

- Instead of a single connection configuration, we added two configuration blocks, 'red' and 'black'. We also added an extra setting named 'currentConfig' that indicates which of the two blocks holds the active connection details.

- The code that previously created DB connections now runs an infinite loop that queries a DynamoDB table on AWS at a specified interval to check whether the current configuration value ('currentConfig') has changed. If it finds a change, it closes all existing connections to the DB and creates new ones using the newly selected configuration.
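To make the red/black idea concrete, here is a minimal Python sketch of the switching logic. It is not the actual Smartcar DB package: the `ConnectionConfig` fields, the `DynamicConnection` class, and the `load_config`/`connect` callables are hypothetical. `load_config` is assumed to return the config item read from DynamoDB (with the 'red', 'black', and 'currentConfig' keys), and `connect` is assumed to return a connection object with a `close()` method.

```python
import threading
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional


@dataclass(frozen=True)
class ConnectionConfig:
    """Hypothetical connection details stored under the 'red' or 'black' key."""
    host: str
    port: int
    database: str
    user: str


class DynamicConnection:
    """Tracks the active color and reconnects when 'currentConfig' changes."""

    def __init__(self,
                 load_config: Callable[[], Dict[str, Any]],
                 connect: Callable[[Any], Any]) -> None:
        self._load_config = load_config  # assumed: reads the config item from DynamoDB
        self._connect = connect          # assumed: opens a Postgres connection or pool
        self.active_color: Optional[str] = None
        self.connection: Any = None

    def refresh(self) -> bool:
        """Reconnect if the selected color changed; return True if it did."""
        config = self._load_config()
        color = config["currentConfig"]  # "red" or "black"
        if color == self.active_color:
            return False
        # Open the new connection before closing the old one, so a bad config
        # raises here and leaves the existing connection untouched.
        new_connection = self._connect(config[color])
        if self.connection is not None:
            self.connection.close()
        self.connection = new_connection
        self.active_color = color
        return True

    def run_forever(self, interval_seconds: float, stop: threading.Event) -> None:
        """Check once, then every interval_seconds until `stop` is set."""
        self.refresh()
        # Event.wait returns False on timeout and True once the event is set.
        while not stop.wait(interval_seconds):
            self.refresh()
```

`run_forever` would typically run on a daemon thread, e.g. `threading.Thread(target=conn.run_forever, args=(30, stop), daemon=True).start()`. Note that this sketch opens the new connection before closing the old ones, a small deviation from the order described above, so that a typo in the new block fails without dropping the working connection.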

We also added a few other niceties to the process:

- An additional "test color" setting that, when set, tests the given color's configuration instead of switching the connection to it.

- An interval (TTL) setting, in seconds, for the infinite loop. This lets us control how frequently the code checks DynamoDB for configuration changes.

- Extensive logging as the loop checks for configuration changes. When a change is pushed (such as the current config color or the interval value), we log which service picked up the change and whether it was applied successfully.
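One iteration of the polling loop, including the test color, TTL, and logging described above, might look like the sketch below. The key names `testColor` and `ttl`, the `decide` function, and the 60-second fallback are hypothetical; the sketch also assumes that "testing" a color simply means attempting a connection with that block and logging the result.

```python
import logging
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional

logger = logging.getLogger("db-package")

DEFAULT_TTL_SECONDS = 60  # hypothetical fallback when no TTL is stored


@dataclass(frozen=True)
class PollDecision:
    action: str         # "noop", "test", or "switch"
    next_interval: int  # seconds to wait before the next check


def decide(service: str,
           active_color: str,
           config: Dict[str, Any],
           try_connect: Callable[[Any], bool]) -> PollDecision:
    """Decide what one iteration of the polling loop should do.

    Assumed config keys: 'currentConfig', 'red', 'black', plus the optional
    (hypothetical) 'testColor' and 'ttl' keys.
    """
    # int() also accepts the Decimal values boto3 returns for DynamoDB numbers.
    ttl = int(config.get("ttl", DEFAULT_TTL_SECONDS))

    test_color: Optional[str] = config.get("testColor")
    if test_color:
        # Test mode: try the named block and log the outcome, but do not switch.
        ok = try_connect(config[test_color])
        logger.info("service=%s tested color=%s result=%s",
                    service, test_color, "ok" if ok else "failed")
        return PollDecision("test", ttl)

    target = config["currentConfig"]
    if target != active_color:
        logger.info("service=%s switching %s -> %s (next check in %ss)",
                    service, active_color, target, ttl)
        return PollDecision("switch", ttl)

    return PollDecision("noop", ttl)
```

Keeping the decision separate from the actual reconnect makes it easy to log the outcome of each check and to unit-test the loop without a real database.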

Based on the updates above, our current architecture looks like this:

Now, all we had to do was insert the new connection details into DynamoDB and shorten the interval enough for the change to take effect quickly.
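The cutover itself then reduces to a short, ordered series of writes to the config item. The sketch below computes those snapshots without touching AWS; the `plan_cutover` name and the `ttl` key are hypothetical, the 30-second interval matches the value mentioned later in this post, and the final step reflects the one-day TTL lesson described in the next section.

```python
import copy
from typing import Any, Dict, List, Tuple

FAST_TTL_SECONDS = 30         # short interval used during the switch
SLOW_TTL_SECONDS = 24 * 3600  # one day, restored once the switch is done


def plan_cutover(item: Dict[str, Any],
                 new_details: Dict[str, Any]) -> List[Tuple[str, Dict[str, Any]]]:
    """Return the ordered config snapshots to write to DynamoDB.

    The new database's details go into whichever color is NOT active,
    so the block that clients are currently using is never overwritten.
    """
    active = item["currentConfig"]
    standby = "black" if active == "red" else "red"
    state = copy.deepcopy(item)  # never mutate the caller's item
    steps: List[Tuple[str, Dict[str, Any]]] = []

    state[standby] = dict(new_details)
    steps.append((f"load new connection details into '{standby}'", copy.deepcopy(state)))

    state["ttl"] = FAST_TTL_SECONDS
    steps.append(("shorten the polling interval", copy.deepcopy(state)))

    state["currentConfig"] = standby
    steps.append((f"flip currentConfig {active} -> {standby}", copy.deepcopy(state)))

    state["ttl"] = SLOW_TTL_SECONDS
    steps.append(("raise the polling interval back to a day", copy.deepcopy(state)))
    return steps
```

Each snapshot could then be written with boto3's `Table.put_item(Item=snapshot)`. Between writes, wait at least one full polling interval so every instance has picked up the previous change; in particular, the shortened TTL only takes effect after each instance's next check under the old TTL.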

The unexpected surprises (a.k.a. what we learned)

When our systems were ready to switch the database over dynamically, we ran through the complete list of migration steps a few times.

Along the way, we learned a few things that made our process better, faster, and cleaner.

- Auto-generated sequences: We discovered that Bucardo was not syncing the sequences behind auto-generated ID (serial) columns. A table with an auto-generated ID would have its values copied over, but the sequence on the new database was not necessarily advanced to the latest value, so new inserts could collide with existing IDs. We're investigating this problem further, but for now, we've added a step to our Bucardo setup to update the sequences manually.

- Services are scaled: We run multiple instances of each service to handle high request volumes, but a configuration change made through the DB package only took effect on a given instance once a request went through that instance. Rather than figuring out how to trigger the update by sending requests to each instance individually, we scaled our services down to one instance for a few minutes while the config switch was in progress.

- Deployment failure post-migration: During our first run, one of our services' deployments failed, leaving the TTL stuck at 30 seconds for a long time. So we added a step to raise the TTL to one day after switching databases, so the check doesn't keep running every 30 seconds even if a deployment fails.
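For the sequence issue, one way to script the manual fix is to generate a `setval` statement per serial column. `setval` and `pg_get_serial_sequence` are built-in Postgres functions; the helper names below are hypothetical, and the discovery query assumes plain `serial` columns (identity columns do not show a `nextval(...)` default in `information_schema.columns`).

```python
from typing import List, Tuple

# Lists columns whose default draws from a sequence (how serial columns are
# defined in Postgres); run this on the new database to find what to fix.
FIND_SERIAL_COLUMNS_SQL = """
SELECT table_schema, table_name, column_name
FROM information_schema.columns
WHERE column_default LIKE 'nextval(%'
ORDER BY table_schema, table_name, column_name;
"""


def quote_ident(name: str) -> str:
    """Quote a Postgres identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def setval_sql(schema: str, table: str, column: str) -> str:
    """Build a statement that moves a column's sequence past its current MAX.

    With is_called = false, the next nextval() returns exactly MAX(column) + 1
    (or 1 for an empty table).
    """
    qualified = f"{quote_ident(schema)}.{quote_ident(table)}"
    return (
        f"SELECT setval(pg_get_serial_sequence({quote_literal(qualified)}, "
        f"{quote_literal(column)}), "
        f"COALESCE(MAX({quote_ident(column)}), 0) + 1, false) "
        f"FROM {qualified};"
    )


def resync_statements(columns: List[Tuple[str, str, str]]) -> List[str]:
    """One setval statement per (schema, table, column) row."""
    return [setval_sql(s, t, c) for s, t, c in columns]
```

Because new rows keep arriving until replication has caught up, these statements are best run close to the cutover rather than right after the initial copy.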

Moving forward with repeatable processes

All the trial and error eventually gave us a solid, repeatable process for our database upgrades. In fact, we've already done three upgrades with it!

Even though we're confident that this approach to migration results in no downtime, we still notify our users about database maintenance and give them a window during which our systems could return errors. We do this solely to accommodate unexpected events, and we recommend keeping it in mind if you try this approach yourself!

We’re looking forward to sharing more learnings with the developer community! Subscribe to our newsletter below to stay tuned to our blog and to share your thoughts on developer productivity or all things connected cars 🤓

Have a cool idea you want to explore with the Smartcar API? Head on over to our docs and create a developer account to retrieve your API credentials and get started with the integration!